We’re back at it with a brand new Brazilian Jiu Jitsu data collection process that offers more insight into how matches were scored, and into scoring trends, at the 2019 World Championships. This time, we used computer vision techniques, namely video event detection and optical character recognition (OCR), to collect the points scored in each match and the exact time each score occurred.

This blog is part of a larger project in which my Crazy 88 teammate, Ashar Nadeem, and I use a variety of data science tools to collect BJJ match data. For this post, we used 87 match videos from the 2019 World Championships posted on the International Brazilian Jiu Jitsu Federation (IBJJF) YouTube channel. They cover men's and women's black belt quarterfinal, semifinal, and final matches, arguably the most high-stress and meaningful matches of the tournament.

This portion of the project was limited to score detection—the points scored and the exact time a score happened—by analyzing a change in the numbers on the scoreboard. A later analysis will look at the scoring positions themselves in more depth.

This project was two-fold:

1. We wrote a program to detect events in the videos, namely changes in the numbers on the scoreboard. Whenever the scoreboard changed, the program took a screenshot of it and recorded the timestamp at which the score took place.

2. Next, we wrote a second program that used optical character recognition to read the numbers in the saved images, then built a master dataset covering all matches, fighters, tournament rounds, event timestamps, and the actual scores.

Quick Summary:

• 1/4 of all matches ended with a 0-0 score

• 1/3 of matches ended with an even score, decided either by a referee's decision or by submission

• Most scores occurred in the first half of the match and decreased with time

• Scoring first and last mattered

Findings:

In fact, 24% of matches ended with a 0-0 score, 8% with 2-2, and 5% with 4-4, suggesting the fighters were closely matched in technical ability. The highest-scoring match was Lucas Lepri vs. John Combs, which Lepri won 45-0.

A quarter of the matches ended with one fighter holding a single advantage (1-0). In the Keenan Cornelius vs. Nicholas Meregali match, Meregali scored 10 advantages. In 17% of matches, neither fighter had an advantage (0-0), so any tie on points in those matches came down to penalties. It was more common for both fighters to finish with the same number of penalties (1-1, 2-2, or 3-3), most likely from double-pull or stalling calls given to both fighters.

For matches ending in a tie on points (0-0, 2-2, 4-4, and 8-8), advantages and penalties became the deciding factor. However, 25% of these tied matches were also 0-0 in advantages, and 25% of those were 0-0 in penalties as well. In a nutshell, there were a lot of referee decisions at the World Championships, and that number continues to rise year over year.
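The tie-break order can be written out as a small decision function. This is a minimal sketch that assumes the IBJJF order for matches that go the distance: points first, then advantages, then fewer penalties, and a referee's decision only when all three are level. A submission ends the match regardless, so it is out of scope here. `Scoreboard` and `decide` are hypothetical names used for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scoreboard:
    """One fighter's final scoreboard line (hypothetical structure)."""
    points: int
    advantages: int
    penalties: int


def decide(top: Scoreboard, bottom: Scoreboard) -> str:
    """Return 'top', 'bottom', or 'referee' using the assumed tie-break order."""
    if top.points != bottom.points:
        return "top" if top.points > bottom.points else "bottom"
    if top.advantages != bottom.advantages:
        return "top" if top.advantages > bottom.advantages else "bottom"
    if top.penalties != bottom.penalties:
        # Once points and advantages are level, fewer penalties wins.
        return "top" if top.penalties < bottom.penalties else "bottom"
    # Everything is level: the referee decides.
    return "referee"


# 2-2 on points, 1-0 on advantages: the advantage decides the match.
print(decide(Scoreboard(2, 1, 0), Scoreboard(2, 0, 0)))  # top
```

A fully level 0-0, 0-0, 0-0 scoreboard falls through every check and ends at `"referee"`, which is the situation described above.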

In 32% of matches the final point difference was zero, and in 27% it was 2 points. The most common final result at the tournament was 0-0 on points, 0-0 on advantages, and 0-0 on penalties.
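The point-difference breakdown is a tally of the absolute gap between the two fighters' final points. A minimal sketch; the scores in `final_points` are made up to show the arithmetic and are not the tournament data.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical final point scores (top, bottom), for illustration only.
final_points: List[Tuple[int, int]] = [(0, 0), (2, 0), (0, 0), (4, 2), (2, 2), (45, 0)]


def point_difference_shares(scores: List[Tuple[int, int]]) -> Dict[int, float]:
    """Return {absolute point difference: share of matches with that difference}."""
    counts = Counter(abs(top - bottom) for top, bottom in scores)
    total = len(scores)
    return {diff: count / total for diff, count in sorted(counts.items())}


print(point_difference_shares(final_points))
```

With real data, the share stored under key `0` corresponds to the "no point difference" figure and the share under key `2` to the 2-point figure.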

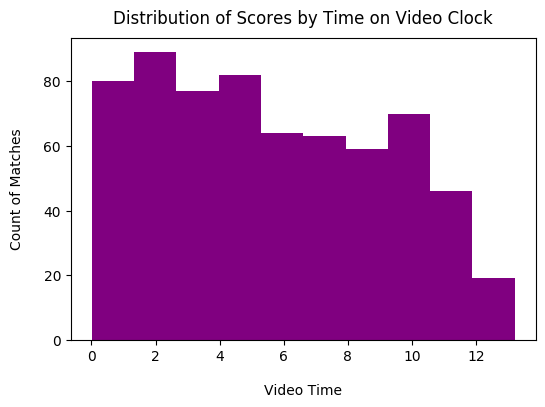

Scores by Time

When we plotted the times at which scores occurred, we saw that more scores took place early in the match and that scoring became less frequent as the clock counted down. Scoring in the first quarter of the match was arguably the most important.

The most common scores in the first quarter were a single penalty, a single advantage, or a double penalty for a double pull.
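Assigning each score to a quarter of the match is a simple binning step. The sketch assumes score times are measured in seconds elapsed since the start of the match and a 10-minute regulation time for adult black belt matches; both are assumptions, and `quarter_of_match` and `scores_per_quarter` are hypothetical helpers.

```python
from collections import Counter
from typing import Iterable, List

MATCH_SECONDS = 10 * 60  # assumed regulation length for adult black belt matches


def quarter_of_match(elapsed_seconds: float, match_seconds: int = MATCH_SECONDS) -> int:
    """Map seconds elapsed since the start of the match to quarter 1-4."""
    if not 0 <= elapsed_seconds <= match_seconds:
        raise ValueError("score time falls outside the match")
    # min() keeps a score at the final buzzer in the fourth quarter.
    return min(int(elapsed_seconds // (match_seconds / 4)) + 1, 4)


def scores_per_quarter(score_times: Iterable[float]) -> List[int]:
    """Count score events in each quarter of the match."""
    counts = Counter(quarter_of_match(t) for t in score_times)
    return [counts.get(q, 0) for q in range(1, 5)]
```

For example, `scores_per_quarter([10, 100, 200, 599])` returns `[2, 1, 0, 1]`.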

Furthermore, in matches where one fighter scored first, that fighter won 75% of the time, and in matches where one fighter scored last, that fighter won 87% of the time. In 70% of matches where a fighter scored both first and last, they also won.
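These win rates come from filtering matches and comparing one fighter with the winner. In this minimal sketch, each match is reduced to a hypothetical `Match` record listing, in chronological order, which fighter was credited with each scoring event; what counts as a scoring event (points only, or advantages too) is left as an assumption.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Match:
    """Hypothetical per-match summary: who scored, in order, and who won."""
    scorers: List[str]  # e.g. ["top", "bottom", "top"], in chronological order
    winner: str


def win_rate(matches: List[Match], pick: Callable[[Match], Optional[str]]) -> float:
    """Share of matches won by the fighter returned by `pick`.

    Matches where `pick` returns None (no qualifying scorer) are skipped.
    """
    relevant = [m for m in matches if pick(m) is not None]
    if not relevant:
        return 0.0
    return sum(pick(m) == m.winner for m in relevant) / len(relevant)


def first_scorer(m: Match) -> Optional[str]:
    return m.scorers[0] if m.scorers else None


def last_scorer(m: Match) -> Optional[str]:
    return m.scorers[-1] if m.scorers else None


def first_and_last_scorer(m: Match) -> Optional[str]:
    # Only counts matches where the same fighter scored first and last.
    if m.scorers and m.scorers[0] == m.scorers[-1]:
        return m.scorers[0]
    return None
```

Calling `win_rate(matches, first_scorer)`, `win_rate(matches, last_scorer)`, and `win_rate(matches, first_and_last_scorer)` gives the three rates; scoreless matches drop out of each denominator.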

Methodology:

About Event Detection in Video

Finding an efficient yet simple way to detect changes in the scoreboard proved challenging. Traditional solutions combine artificial intelligence and natural language processing to monitor the scoreboard over time and log events as the score changes. However, these require a lot of development time to fine-tune parameters and often end up with less than ideal accuracy. This is where OpenCV, an open source computer vision and machine learning software library, came in.

We used OpenCV to open and play each video while cropping out everything but the scoreboard for the duration of the match. The script then captured an image for every second of the match and applied a function to compare each image to its predecessor. The function assigned ‘points’ according to how different two images were, with 0 assigned to identical images. For our use case, a difference threshold of 75,000 was the sweet spot for detecting a number changing on the scoreboard, whether points, advantages, or penalties. When a change in the scoreboard was detected, the script saved that image and deleted the previous one. Here are a few examples:

When the script saved an image, it named the file after the timestamp of the event in the video, allowing us to pinpoint when each score occurred.
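A detection loop of this kind can be sketched in Python with OpenCV and NumPy. This is a minimal sketch under stated assumptions, not a reproduction of the original script: the scoreboard coordinates in `SCOREBOARD_BOX` are placeholders, the difference score is assumed to be the sum of absolute pixel differences between consecutive grayscale crops (the 75,000 threshold only carries over if the metric matches), and the `mm-ss.png` naming scheme is hypothetical.

```python
from typing import Iterable, Iterator, Tuple

import numpy as np

DIFF_THRESHOLD = 75_000  # value from the text; only meaningful if the metric matches
# Placeholder scoreboard location in the video frame: (x, y, width, height).
SCOREBOARD_BOX = (20, 560, 300, 120)


def difference_score(prev: np.ndarray, curr: np.ndarray) -> int:
    """Sum of absolute pixel differences; 0 means the two crops are identical."""
    # Cast up from uint8 so the subtraction cannot wrap around.
    return int(np.abs(prev.astype(np.int32) - curr.astype(np.int32)).sum())


def timestamp_name(seconds: int) -> str:
    """File name for a saved frame, e.g. 125 -> '02-05.png'."""
    minutes, secs = divmod(int(seconds), 60)
    return f"{minutes:02d}-{secs:02d}.png"


def detect_changes(
    crops: Iterable[Tuple[int, np.ndarray]], threshold: int = DIFF_THRESHOLD
) -> Iterator[Tuple[int, np.ndarray]]:
    """Yield (second, crop) whenever a crop differs enough from the one before it."""
    previous = None
    for second, crop in crops:
        if previous is not None and difference_score(previous, crop) > threshold:
            yield second, crop
        previous = crop  # only the latest crop is kept for the next comparison


def sample_scoreboard(video_path: str, box=SCOREBOARD_BOX) -> Iterator[Tuple[int, np.ndarray]]:
    """Read a video with OpenCV and yield one grayscale scoreboard crop per second."""
    import cv2  # imported lazily so the helpers above can be used without OpenCV

    x, y, w, h = box
    cap = cv2.VideoCapture(video_path)
    fps = cap.get(cv2.CAP_PROP_FPS) or 30.0  # fall back if the container reports 0
    step = max(int(round(fps)), 1)
    frame_index = 0
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            if frame_index % step == 0:
                crop = cv2.cvtColor(frame[y:y + h, x:x + w], cv2.COLOR_BGR2GRAY)
                yield int(frame_index / fps), crop
            frame_index += 1
    finally:
        cap.release()
```

To run it end to end, one could loop over `detect_changes(sample_scoreboard("match.mp4"))` and write each crop with `cv2.imwrite(timestamp_name(second), crop)`, where `"match.mp4"` is a placeholder path. Keeping the OpenCV import inside `sample_scoreboard` lets the comparison logic be tested on plain NumPy arrays.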

About Optical Character Recognition in Images

Once we had an image dataset, we needed to translate text on the image into numbers in a new dataset for analysis. The video names, as posted on YouTube, gave us the fighter names. Each image saved from the video event detection script was named after the timestamp in the video, which gave us the times of each score.
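Turning file names into dataset columns is mostly string parsing. The sketch below assumes video titles of the form `Fighter A vs Fighter B - ...` and image files named `mm-ss.png`; both formats, and the function names, are hypothetical stand-ins rather than the exact conventions used in this project.

```python
import re
from pathlib import Path
from typing import Dict, Iterable, List, Tuple

# Hypothetical title format, e.g. "Lucas Lepri vs John Combs - 2019 Worlds".
TITLE_PATTERN = re.compile(
    r"^(?P<top>.+?)\s+vs\.?\s+(?P<bottom>.+?)(?:\s+[-|].*)?$", re.IGNORECASE
)
# Hypothetical image naming convention: minutes-seconds, e.g. "02-05.png".
IMAGE_PATTERN = re.compile(r"^(?P<min>\d+)-(?P<sec>\d{2})$")


def fighters_from_title(title: str) -> Tuple[str, str]:
    """Split a video title into the two fighter names."""
    match = TITLE_PATTERN.match(title.strip())
    if match is None:
        raise ValueError(f"unrecognised title: {title!r}")
    return match["top"], match["bottom"]


def seconds_from_image(path: str) -> int:
    """Recover the video timestamp, in seconds, from a saved image's file name."""
    match = IMAGE_PATTERN.match(Path(path).stem)
    if match is None:
        raise ValueError(f"unrecognised image name: {path!r}")
    return int(match["min"]) * 60 + int(match["sec"])


def build_rows(title: str, image_paths: Iterable[str]) -> List[Dict[str, object]]:
    """One row per saved image: both fighters plus the second the score changed."""
    top, bottom = fighters_from_title(title)
    return [
        {"top": top, "bottom": bottom, "seconds": seconds_from_image(p)}
        for p in sorted(image_paths, key=seconds_from_image)
    ]
```

The OCR output for each image can then be added to the matching row.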

Next, we cropped the scoreboard into four sections: the top score, the bottom score, and the top and bottom advantages and penalties. Each section required its own image preprocessing to optimize the optical character recognition of the numbers. The images went through various combinations of resizing, grayscaling, thresholding, inverted thresholding, and blurring to clean up the final image. Here are a few more examples:

Finally, we used Pytesseract, a Python wrapper for Google's out-of-the-box Tesseract-OCR engine, to “read” the text embedded in each image. We ran this script over every image and wrote the numbers it returned into the final dataset for scoreboard analysis.
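Putting the cropping, cleanup, and OCR steps together, a minimal sketch might look like the following. The region coordinates in `REGIONS` are placeholders, the order of the cleanup steps and the use of Otsu thresholding are assumptions, and `--psm 7` (treat the image as a single text line) with a digits-only `tessedit_char_whitelist` is one reasonable Tesseract configuration, not necessarily the one used here. `pytesseract` also needs the Tesseract binary installed, and the whitelist requires a recent Tesseract release.

```python
import re
from typing import Dict, List, Tuple

import numpy as np

# Placeholder sub-boxes inside the scoreboard crop, as (x, y, width, height).
REGIONS: Dict[str, Tuple[int, int, int, int]] = {
    "top_score": (0, 0, 60, 60),
    "bottom_score": (0, 60, 60, 60),
    "top_adv_pen": (60, 0, 40, 60),
    "bottom_adv_pen": (60, 60, 40, 60),
}

# Single text line (page segmentation mode 7), digits only.
DIGITS_CONFIG = "--psm 7 -c tessedit_char_whitelist=0123456789"


def crop_regions(scoreboard: np.ndarray, regions=REGIONS) -> Dict[str, np.ndarray]:
    """Split a scoreboard image into its four OCR sections."""
    return {name: scoreboard[y:y + h, x:x + w] for name, (x, y, w, h) in regions.items()}


def preprocess(section: np.ndarray, scale: float = 3.0, invert: bool = False) -> np.ndarray:
    """Upscale, grayscale, blur and binarise one section before OCR."""
    import cv2  # imported lazily so crop_regions and parse_numbers work without OpenCV

    resized = cv2.resize(section, None, fx=scale, fy=scale, interpolation=cv2.INTER_CUBIC)
    gray = cv2.cvtColor(resized, cv2.COLOR_BGR2GRAY) if resized.ndim == 3 else resized
    blurred = cv2.GaussianBlur(gray, (3, 3), 0)
    # Inverted thresholding turns light digits on a dark panel into dark-on-light.
    mode = cv2.THRESH_BINARY_INV if invert else cv2.THRESH_BINARY
    # With THRESH_OTSU the threshold is chosen from the histogram (the 0 is ignored).
    _, binary = cv2.threshold(blurred, 0, 255, mode | cv2.THRESH_OTSU)
    return binary


def parse_numbers(text: str) -> List[int]:
    """Extract every run of digits from raw OCR output, e.g. '1 0' -> [1, 0]."""
    return [int(group) for group in re.findall(r"\d+", text)]


def read_section(section: np.ndarray, invert: bool = False) -> List[int]:
    """Preprocess one section and read its digits with pytesseract."""
    import pytesseract  # requires the Tesseract binary to be installed

    text = pytesseract.image_to_string(preprocess(section, invert=invert), config=DIGITS_CONFIG)
    return parse_numbers(text)
```

`parse_numbers` returns every run of digits, so an advantages-and-penalties section read as `"1 0"` becomes `[1, 0]`, assuming Tesseract separates the two numbers with whitespace. The per-section `invert` flag reflects the idea that each section may need different cleanup.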

With the above two scripts, we were able to collect a new dataset that can contribute to our understanding of the importance of scoring in matches at the IBJJF World Championships.

Next Steps:

Several projects are underway, including a machine learning image classification project to identify the Jiu Jitsu position shown in an image.

The event detection script was instrumental in documenting when in each match a score occurred. This data will be used in a future project to analyze how scores occurred within various video segments.

Limitations:

The analysis does not cover the entire 2019 World Championships; it is limited to black belt matches. Furthermore, it does not include the rounds before the quarterfinals, and the analysis must be viewed within this context.

From the 87 matches, the event detection script generated 1,063 images. Because the scoreboard occupied only a small part of the video frame, its resolution was low. Resizing the images to improve their resolution helped increase data collection accuracy.

The OCR script recorded a number 93-99% of the time in each of the four categories (the top score, the bottom score, and the top and bottom advantages and penalties). The final-score image from each of the 87 matches was manually checked to confirm the accuracy of the data collection. Due to time and resource limitations, not all 1,063 images were spot-checked.
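The per-category read rate is the share of images in which OCR returned anything for a given section. A minimal sketch, assuming each row maps a hypothetical section name to the list of numbers OCR read for that image, with an empty list (or `None`) when nothing was read:

```python
from typing import Dict, List

# Hypothetical column names for the four scoreboard sections.
SECTIONS = ("top_score", "bottom_score", "top_adv_pen", "bottom_adv_pen")


def read_rates(rows: List[Dict[str, object]], sections=SECTIONS) -> Dict[str, float]:
    """Share of images in which OCR returned at least one number, per section."""
    if not rows:
        return {section: 0.0 for section in sections}
    return {
        # Missing values and empty lists both count as "nothing was read".
        section: sum(row.get(section) not in (None, []) for row in rows) / len(rows)
        for section in sections
    }
```

Note that a read rate only says a number was returned, not that it was correct, which is why the manual checks described above are still needed.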

The final dataset was cleaned and wrangled to improve overall data quality. For instance, a penalty count can only be 0, 1, 2, or 3, yet the OCR occasionally recorded 11 or 13 instead of 0 because the scoreboard cut off the bottom of the number in the image. After exploring the dataset, we identified these errors and changed them to the correct score.
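The penalty correction described above fits in a small lookup. This sketch encodes only the rule stated in the text (valid penalties are 0 to 3, and 11 or 13 are misreads of 0); returning `None` for any other value, so it can be reviewed by hand, is an assumption of this sketch, and `clean_penalty` is a hypothetical name.

```python
from typing import Optional

VALID_PENALTIES = {0, 1, 2, 3}
# Misreads seen in the data: a "0" with its bottom cut off came back as 11 or 13.
PENALTY_FIXES = {11: 0, 13: 0}


def clean_penalty(value: Optional[int]) -> Optional[int]:
    """Map known OCR misreads to 0; return None for anything else out of range."""
    if value in VALID_PENALTIES:
        return value
    if value in PENALTY_FIXES:
        return PENALTY_FIXES[value]
    return None  # flag for manual review (also covers failed reads passed as None)
```

Applied column-wise, for example `[clean_penalty(v) for v in penalties]`, this keeps valid values untouched and isolates anything the rule cannot explain.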